diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 98ac38632..e6ae17071 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -57,6 +57,11 @@ but in some OS (like MacOS) that would require installing additional libs ([this

After installation, you should be able to run both tests and the library components without issues.

+### Workflows

+

+The Workflows Execution Engine requires a specialized approach to development. The proposed strategy is outlined

+in the [Workflow block creation guide](https://inference.roboflow.com/workflows/create_workflow_block/).

+

## 🐳 Building docker image with inference server

To test the changes related to `inference` server, you would probably need to build docker image locally.

@@ -73,6 +78,17 @@ repo_root$ docker build -t roboflow/roboflow-inference-server-cpu:dev -f docker/

repo_root$ docker build -t roboflow/roboflow-inference-server-gpu:dev -f docker/dockerfiles/Dockerfile.onnx.gpu .

```

+Run the container with the following command:

+

+```bash

+inference_repo$ docker run -p 9001:9001 \

+ -v ./inference:/app/inference \

+ roboflow/roboflow-inference-server-cpu:dev

+```

+

+Running the command from the `inference` repository root with the volume mounted is helpful for development: you

+can build the image once and then run the container repeatedly while iterating on the code.

+

## 🧹 Code quality

We provide two handy commands inside the `Makefile`, namely:

@@ -93,12 +109,16 @@ We would like all low-level components to be covered with unit tests. That tests

* deterministic (not flaky)

* covering all [equivalence classes](https://piketec.com/testing-with-equivalence-classes/#:~:text=Testing%20with%20equivalence%20classes&text=Equivalence%20classes%20in%20the%20test,class%20you%20use%20as%20input.)

+Our test suites are organized to target different areas of the codebase, so when working on a specific feature, you

+typically only need to focus on the relevant part of the test suite.

+

Running the unit tests:

```bash

repo_root$ (inference-development) pytest tests/inference/unit_tests/

repo_root$ (inference-development) pytest tests/inference_cli/unit_tests/

repo_root$ (inference-development) pytest tests/inference_sdk/unit_tests/

+repo_root$ (inference-development) pytest tests/workflows/unit_tests/

```

With GH Actions defined in `.github` directory, the ones related to integration tests at `x86` platform

@@ -113,6 +133,9 @@ Those should check specific functions e2e, including communication with external

real service cannot be used for any reasons). Integration tests may be more bulky than unit tests, but we wish them

not to require burning a lot of resources, and be completed within max 20-30 minutes.

+Similar to unit tests, integration tests are organized into different suites, each focused on a specific part

+of the `inference` library.

+

Running the integration tests locally is possible, but only in some cases. For instance, one may locally run:

```bash

repo_root$ (inference-development) pytest tests/inference/models_predictions_tests/

@@ -122,6 +145,7 @@ repo_root$ (inference-development) pytest tests/inference_cli/integration_tests/

But running

```bash

repo_root$ (inference-development) pytest tests/inference/integration_tests/

+repo_root$ (inference-development) pytest tests/workflows/integration_tests/

```

will not be fully possible, as part of them require API key for Roboflow API.

@@ -130,6 +154,54 @@ will not be fully possible, as part of them require API key for Roboflow API.

It would be a great contribution to make `inference` server integration tests running without API keys for Roboflow.

+### 🚧 Testing requirements for external contributions 🚦

+

+We use secret API keys and custom GitHub Actions runners in our CI pipeline, which may cause the majority of

+CI actions to fail when you submit a pull request (PR) from a forked repository. While we are working to improve this

+experience, you should not worry too much, as long as you have tested the following locally:

+

+#### For changes in the core of `inference`:

+

+- Add new tests to `tests/inference/unit_tests`.

+

+- Ensure that you can run `pytest tests/inference/unit_tests` without errors.

+

+- When adding a model, include tests with example model inference in `tests/inference/models_predictions_tests`,

+and run `pytest tests/inference/models_predictions_tests/test_{{YOUR_MODEL}}.py` to confirm that the tests pass.

+

+#### For changes to the `inference` server:

+

+- Add tests to `tests/inference/integration_tests/`.

+

+- Build the CPU `inference` server image locally and run it (see commands above).

+

+- Run your tests against the server using: `pytest tests/inference/integration_tests/test_{{YOUR-TEST-MODULE}}`.

+

+

+#### For changes in `inference-cli`:

+

+- Add tests for your changes in `tests/inference_cli`.

+

+- Run `pytest tests/inference_cli` without errors.

+

+

+#### For changes in `inference-sdk`:

+

+- Add tests to `tests/inference_sdk`.

+

+- Run `pytest tests/inference_sdk` without errors.

+

+

+#### For changes related to Workflows:

+

+- Add tests to `tests/workflows/unit_tests` and `tests/workflows/integration_tests`.

+

+- Run `pytest tests/workflows/unit_tests` without errors.

+

+- Run `pytest tests/workflows/integration_tests/execution/test_workflow_with_{{YOUR-BLOCK}}` without errors.

+

+For details, see the [environment setup section](https://inference.roboflow.com/workflows/create_workflow_block/#environment-setup) of the Workflow block creation guide.

+

## 📚 Documentation

Roboflow Inference uses mkdocs and mike to offer versioned documentation. The project documentation is hosted on [GitHub Pages](https://inference.roboflow.com).

diff --git a/docs/workflows/create_workflow_block.md b/docs/workflows/create_workflow_block.md

index 91ab5d5fa..7784a2c35 100644

--- a/docs/workflows/create_workflow_block.md

+++ b/docs/workflows/create_workflow_block.md

@@ -24,39 +24,91 @@ its inputs and outputs

As you will soon see, creating a Workflow block is simply a matter of defining a Python class that implements

a specific interface. This design allows you to run the block using the Python interpreter, just like any

other Python code. However, you may encounter difficulties when assembling all the required inputs, which would

-normally be provided by other blocks during Workflow execution.

+normally be provided by other blocks during Workflow execution. Therefore, it's important to set up the development

+environment properly for a smooth workflow. We recommend following these steps as part of the standard development

+process (initial steps can be skipped for subsequent contributions):

-While it is possible to run your block during development without executing it via the Execution Engine,

-you will likely need to run end-to-end tests in the final stages. This is the most straightforward way to

-validate the functionality.

+1. **Set up the `conda` environment** and install the main dependencies of `inference`, as described in the

+[`inference` contributor guide](https://github.com/roboflow/inference/blob/main/CONTRIBUTING.md).

-To get started, build the inference server from your branch

-```bash

-inference_repo$ docker build \

- -t roboflow/roboflow-inference-server-cpu:test \

- -f docker/dockerfiles/Dockerfile.onnx.cpu .

-```

+2. **Familiarize yourself with the organization of the Workflows codebase.**

+

+ ??? "Workflows codebase structure - cheatsheet"

+

+ Below are the key packages and directories in the Workflows codebase, along with their descriptions:

+

+ * `inference/core/workflows` - the main package for Workflows.

+

+ * `inference/core/workflows/core_steps` - contains Workflow blocks that are part of the Roboflow Core plugin. At the top levels, you'll find block categories, and as you go deeper, each block has its own package, with modules hosting different versions, starting from `v1.py`.

+

+ * `inference/core/workflows/execution_engine` - contains the Execution Engine. You generally won’t need to modify this package unless contributing to Execution Engine functionality.

+

+ * `tests/workflows/` - the root directory for Workflow tests

+

+ * `tests/workflows/unit_tests/` - suites of unit tests for the Workflows Execution Engine and core blocks. This is where you can test utility functions used in your blocks.

+

+ * `tests/workflows/integration_tests/` - suites of integration tests for the Workflows Execution Engine and core blocks. You can run end-to-end (E2E) tests of your workflows in combination with other blocks here.

+

+

+3. **Create a minimalistic block** – You’ll learn how to do this in the following sections. Start by implementing a simple block manifest and basic logic to ensure the block runs as expected.

+

+4. **Add the block to the plugin** – Once your block is created, add it to the list of blocks exported from the plugin. If you're adding the block to the Roboflow Core plugin, make sure to add an entry for your block in

+[loader.py](https://github.com/roboflow/inference/blob/main/inference/core/workflows/core_steps/loader.py). **If you forget this step, your block won’t be visible!**

+

+5. **Iterate and refine your block** – Continue developing and running your block until you’re satisfied with the results. The sections below explain how to iterate on your block in various scenarios.

+

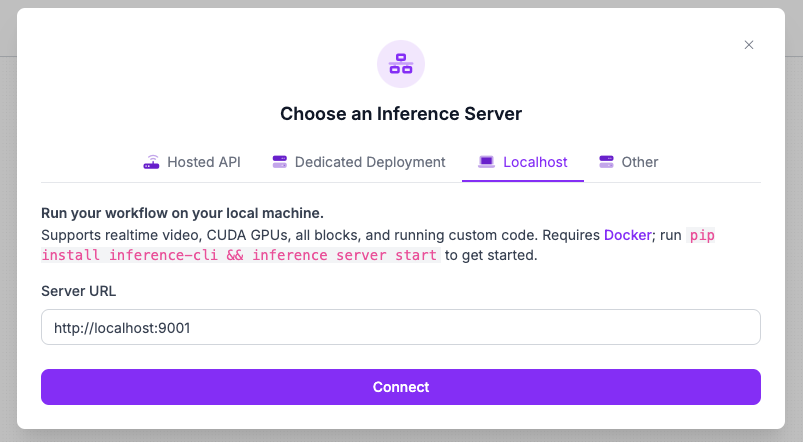

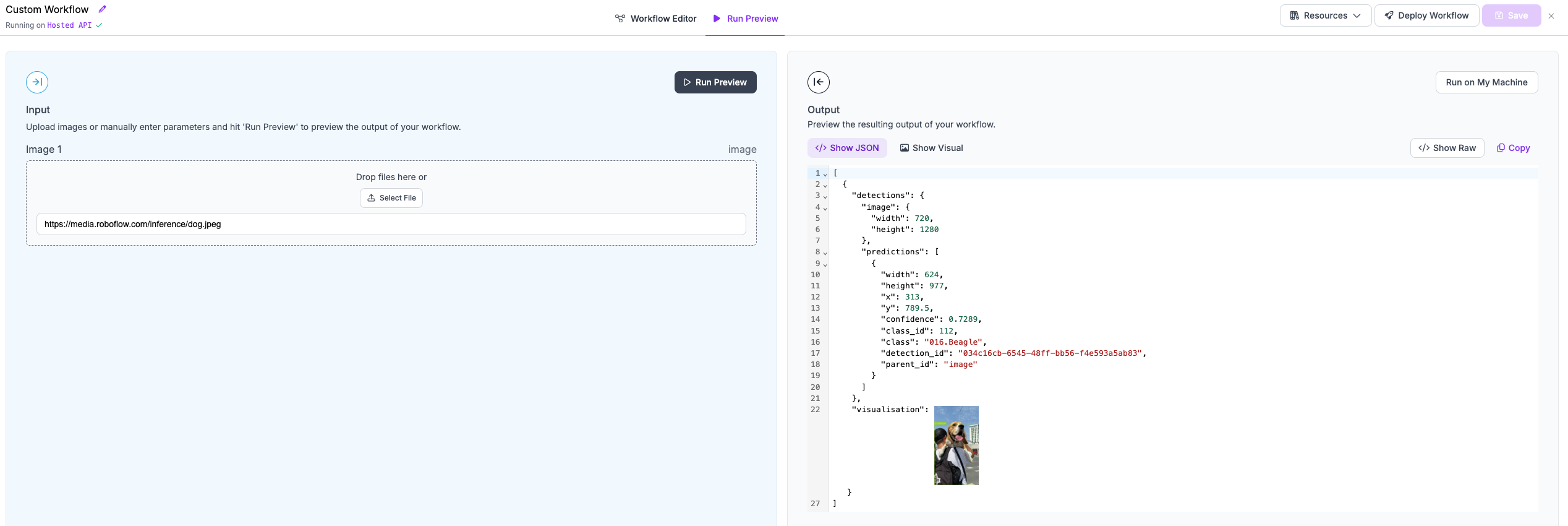

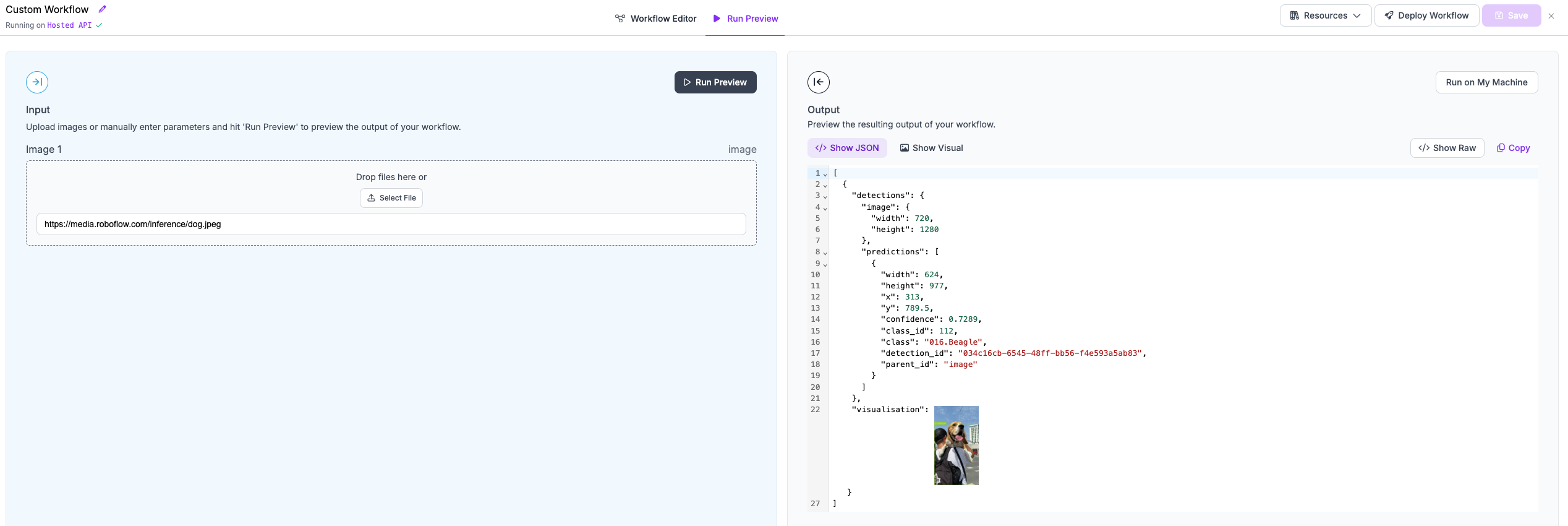

+### Running your blocks using Workflows UI

+

+We recommend running the inference server with a mounted volume (which is much faster than rebuilding the `inference`

+server on each change):

-Run docker image mounting your code as volume

```bash

inference_repo$ docker run -p 9001:9001 \

- -v ./inference:/app/inference \

- roboflow/roboflow-inference-server-cpu:test

+ -v ./inference:/app/inference \

+ roboflow/roboflow-inference-server-cpu:latest

```

-

-Connect your local server to Roboflow UI

+and connecting your local server to Roboflow UI: